Portfolio

A Collection Of My Projects

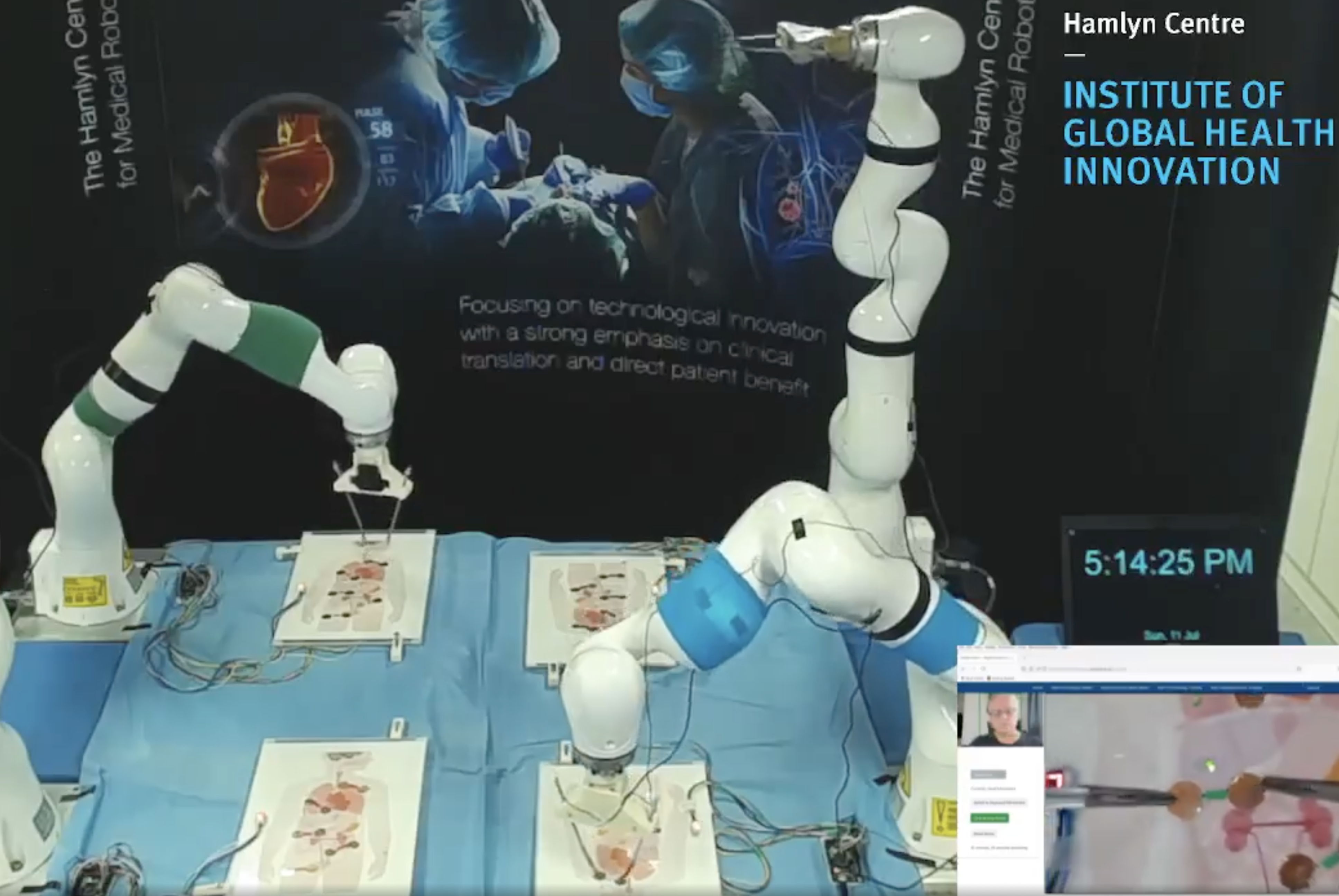

The Royal Society, the world's oldest scientific academy, has held an annual Summer Science Exhibition since 1778. This year, due to the COVID-19 pandemic, the exhibition was held as a virtual event, at which Imperial's HARMS lab showcased its research into robot-assisted minimally invasive surgery.

I was privileged to be part of the team that developed the web application enabling visitors to remotely control the KUKA robots in our labs at Imperial using their head and eye movements!

I led the front-end development of the application (predominantly in JavaScript), which used MediaPipe’s Facemesh and Brown University’s WebGazer APIs to interpret each user's head movement and gaze location. These estimates were computed from the user's built-in camera feed using lightweight machine-learning models, then sent over HTTPS to the lab PCs at Imperial to be translated into robot movements.
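The sketch below illustrates, in very simplified form, the kind of step that sits between gaze estimation and the robot: turning a normalised on-screen gaze point into a discrete movement command and wrapping it in an HTTPS POST request. It is written in Python for consistency with the other examples on this page, not in the JavaScript the app actually used, and everything in it is hypothetical: the `RobotCommand` fields, the `DEAD_ZONE` threshold, `gaze_to_command`, `build_request` and the example URL are assumptions, not the project's real protocol.

```python
import json
import urllib.request
from dataclasses import asdict, dataclass

# Hypothetical: half-width of the central region (in normalised screen units)
# where no movement is requested, so small gaze jitter does not move the robot.
DEAD_ZONE = 0.1


@dataclass(frozen=True)
class RobotCommand:
    """Hypothetical jog command: -1, 0 or +1 on each axis."""
    dx: int  # -1 = left, 0 = stay, +1 = right
    dy: int  # -1 = up, 0 = stay, +1 = down


def gaze_to_command(x: float, y: float) -> RobotCommand:
    """Map a normalised gaze point (0..1 per axis, origin top-left) to a jog direction."""
    def axis(v: float) -> int:
        offset = v - 0.5  # signed distance from the screen centre
        if abs(offset) <= DEAD_ZONE:
            return 0
        return 1 if offset > 0 else -1

    return RobotCommand(dx=axis(x), dy=axis(y))


def build_request(cmd: RobotCommand, url: str) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request carrying the command."""
    body = json.dumps(asdict(cmd)).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical endpoint; urllib.request.urlopen(req) would actually send it.
req = build_request(gaze_to_command(0.9, 0.5), "https://lab-pc.example/robot/command")
```

For example, `gaze_to_command(0.9, 0.5)` returns `RobotCommand(dx=1, dy=0)`: looking towards the right edge of the screen requests a move to the right, while a gaze near the centre produces no movement at all.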

During the Entrepreneurship Module, part of the management component of my third year, I led a team in developing a business plan for a unique idea.

Our idea, Tutti, was a seamless orchestra management application aimed at amateur orchestras. It offered thorough support to orchestras and ensembles of all sizes, in areas ranging from player attendance to full-scale concert arrangement.

We personally reached out to over 100 orchestras across London, interviewing a diverse range of groups to understand what they felt was missing and how we could add value. We also ran several focus groups to design a subscription-based platform that would fit within their budgets, particularly for smaller ensembles. The subscription tiers let music groups select only the features that would benefit them; for example, small recreational groups would not need the large-scale concert management that larger groups require.

After completing the business plan and pitch video, we were awarded the runner-up prize in the funding round, securing a £500 investment to begin developing our idea. We also achieved the Engineers in Business Fellowship with Imperial College Business School.

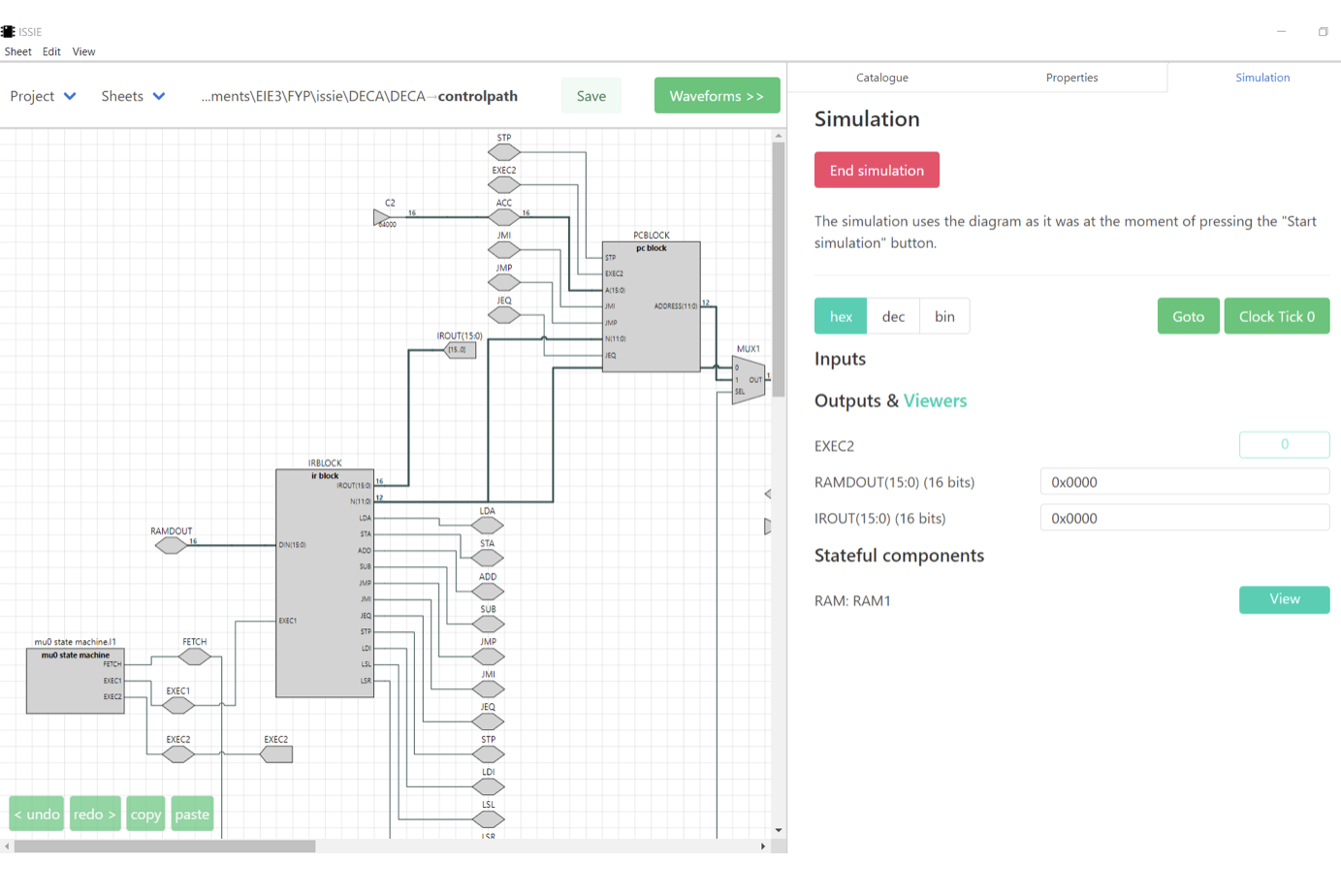

As part of the High Level Programming Module in F#, we helped build ISSIE (Interactive Schematic Simulator with Integrated Editor), an interactive digital system design simulator similar to Quartus. It is written in F#, using Fable to compile F# into JavaScript and Electron to package the web app as a cross-platform desktop application.

As part of the front-end development, I developed the application's user interactions using the Elmish Model-View-Update (MVU) architecture. This involved implementing an intuitive user interface, which I modelled on the highly human-centred macOS.
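To illustrate the Model-View-Update pattern, here is a minimal Python sketch of the same idea: an immutable model, a set of messages, a pure `update` function that returns a new model for each message, and a `view` that renders the model. Elmish itself is F# and expresses messages as discriminated unions handled by pattern matching; the component names and messages here (`Select`, `MoveSelected`, `AND1`, `OR1`) are hypothetical and not taken from ISSIE's code.

```python
from dataclasses import dataclass, replace
from typing import Dict, Optional, Tuple, Union


@dataclass(frozen=True)
class Model:
    """Hypothetical app state: component positions and the current selection."""
    positions: Dict[str, Tuple[int, int]]  # component id -> (x, y)
    selected: Optional[str] = None


# Messages: each user interaction becomes one of these values.
@dataclass(frozen=True)
class Select:
    component_id: str


@dataclass(frozen=True)
class MoveSelected:
    dx: int
    dy: int


Msg = Union[Select, MoveSelected]


def update(msg: Msg, model: Model) -> Model:
    """Pure function: return a new model, never mutate the old one."""
    if isinstance(msg, Select):
        return replace(model, selected=msg.component_id)
    if isinstance(msg, MoveSelected) and model.selected is not None:
        x, y = model.positions[model.selected]
        new_positions = {**model.positions, model.selected: (x + msg.dx, y + msg.dy)}
        return replace(model, positions=new_positions)
    return model  # unknown message or nothing selected: state unchanged


def view(model: Model) -> str:
    """Render the model; a real UI would produce DOM elements instead of text."""
    lines = []
    for cid, (x, y) in sorted(model.positions.items()):
        marker = "*" if cid == model.selected else " "
        lines.append(f"{marker} {cid} at ({x}, {y})")
    return "\n".join(lines)


model = Model(positions={"AND1": (0, 0), "OR1": (10, 0)})
for msg in [Select("AND1"), MoveSelected(5, 3)]:
    model = update(msg, model)
print(view(model))
# * AND1 at (5, 3)
#   OR1 at (10, 0)
```

Because `update` never mutates the previous model, every state is a plain value that can be compared, logged or replayed, which is a large part of what makes MVU interfaces easy to reason about and test.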

ISSIE was used this year at Imperial to teach first-year students about circuits, allowing them to model complete schematics.

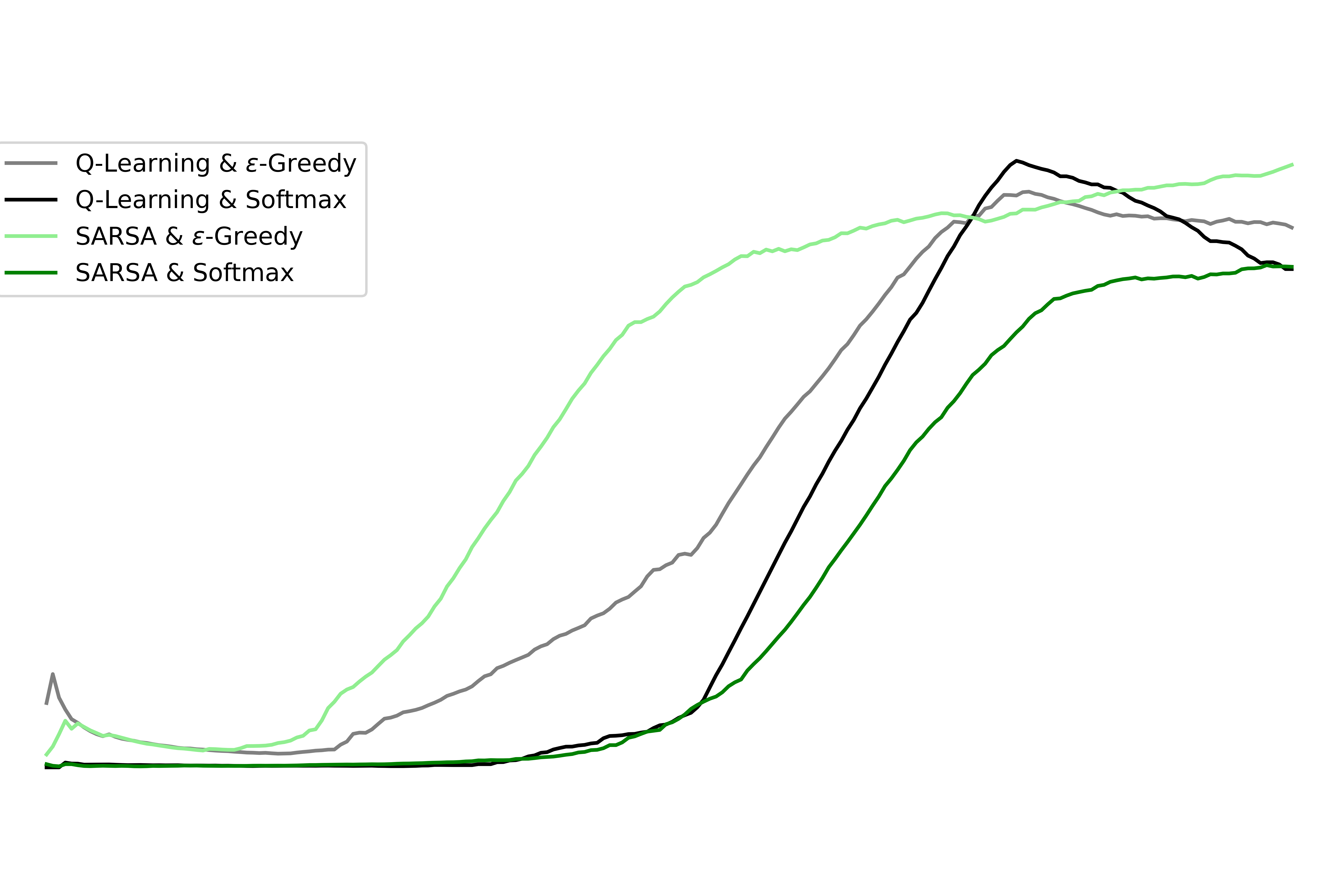

As part of the Deep Learning Module in my third year, I investigated and compared various deep-learning techniques. To consolidate my findings, I produced concise reports highlighting the key differences between methods and variables, so that readers can quickly gain a strong understanding of how to implement the models effectively in different situations.

The research spanned several models, from natural language processing (NLP) to convolutional neural networks for image processing. It was conducted using several Python libraries, including TensorFlow, Keras and Matplotlib, which broadened my software engineering skills. The models were run in Google Colaboratory, whose GPUs sped up training and testing.
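As a small illustration of the operation at the core of the convolutional models mentioned above, the following sketch computes a "valid" 2D convolution (strictly a cross-correlation, which is what Keras's `Conv2D` computes) with stride 1 and no padding, for a single channel and without bias or activation. It is a from-scratch teaching example in plain Python, not code from the coursework; the function name `conv2d_valid` and the hand-picked edge-detection kernel are illustrative choices.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D cross-correlation: stride 1, no padding, single channel, no bias."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1  # output shrinks by (kernel size - 1)
    out_w = len(image[0]) - kw + 1
    out: Matrix = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Slide the kernel over the patch whose top-left corner is (i, j)
            # and sum the element-wise products.
            acc = 0.0
            for u in range(kh):
                for v in range(kw):
                    acc += image[i + u][j + v] * kernel[u][v]
            row.append(acc)
        out.append(row)
    return out


# A dark-to-bright vertical edge and a hand-set vertical edge detector.
image = [[0, 0, 1, 1]] * 4
kernel = [[-1, 1],
          [-1, 1]]
print(conv2d_valid(image, kernel))
# [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The strong response in the middle column marks where the image switches from dark (0) to bright (1). A trained `Conv2D` layer learns kernel values like these from data rather than having them set by hand.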

An account of my findings can be found here: https://github.com/udai-arneja/DeepLearning